Mathigicians: the fraud of ‘Dark Energy’. Magic fairy dust to balance equations.

A completely incoherent variable, inserted as a parameter to make equations balance and satisfy Big Bang-philosophical objectives.

“Many cosmologists advocate reviving [Einstein’s] cosmological constant term on theoretical grounds, as a way to explain the rate of expansion of the universe….The main attraction of the cosmological constant term is that it significantly improves the agreement between theory and observation….

For example, if the cosmological constant today comprises most of the energy density of the universe, then the extrapolated age of the universe is much larger than it would be without such a term, which helps avoid the dilemma that the extrapolated age of the universe is younger than some of the oldest stars we observe!” (NASA, often confused with a film agency, “Dark Energy: A Cosmological Constant?” http://map.gsfc.nasa.gov/universe/uni_matter.html)

But

“Additionally, we must take seriously the idea that the acceleration apparently indicated by supernova data could be due to large scale inhomogeneity with no dark energy. Observational tests of the latter possibility are as important as pursuing the dark energy (exotic physics) option in a homogeneous universe.… because of the foundational nature of the Copernican Principle for standard cosmology, we need to fully check this foundation.

And one must emphasize here that standard CMB anisotropy studies do not prove the Copernican principle: they assume it at the start……then uses some form of observationally-based fitting process to determine its basic parameters” (“Inhomogeneity effects in Cosmology,” George F. R. Ellis, March 14, 2011, University of Cape Town, pp. 19, 5; http://arxiv.org/pdf/1103.2335.pdf).

The confusionists and ‘science’. NASA and its deep state-financed organs of ‘the science’ maintain that ‘dark energy’ must be real or else they are faced with a younger universe. Observational evidence, per Ellis in the second quote, indicates that dark energy is a phantasm, premised on philosophical foundations and biases.

“…do not prove the Copernican principle: they assume it at the start.”

Indeed, philosophy and tautology inform interpretations. The mathigicians can now enter and perform their necromancy. The graphic designers and image propagandists will then take over and assemble the evocative pictures and visualisations. They will have a line pointing to a black area on an image with the notation ‘Black Hole here’. Another arrow will connect to a region on the image and name it ‘Dark Energy’. You will be convinced.

Mathigicians

The ‘dark energy-ists’ of course invoke Saint Einstein and his maths to ‘save the phenomena’. This is ignorance of course.

To his credit Einstein never supported Black Holes and he never supported a fictitious force like ‘dark energy’.

His constant, which could be considered to be equal to Dark Energy + Dark Matter, did not specify either. One obvious reason is that when you add the necessary ‘Dark Energy maths’ to the Einstotle’s equations to account for the specter of an unknown energy source, they don’t work (more below)! You are never told this.

Dark Energy is simply an inserted and calibrated parameter within the Big Bang cosmology. Like Dark Matter, the mathigicians cannot see, hear, feel, taste or touch it, but they ‘know’ it is there. Why? Because the acceleration needed for the Big Bang expansion could not occur without it.

Consider this. You enjoy cycling. You decide you will cycle 100 km. You are fit enough to do only 50 km and stop after 50 km. You go back home tired and disappointed. You sit at your desk and torture maths equations until they scream that the 50 km is really 100 km. You convince yourself that you really cycled 100 km. Mathematically you converted the 50 km into a 100 km and it now becomes a fact.

Einstein’s classical General Relativity can only account for ~4% of the universe.¹ In essence the mathigicians have tortured geometry to come up with the other 96%.

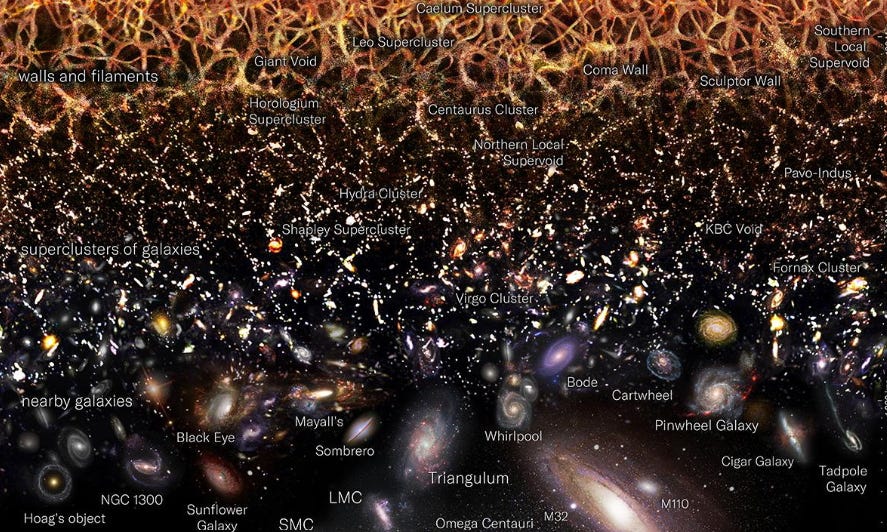

The wizards claim that the universe is 96% Dark Energy and Dark Matter. This is a very recent (less than 40 years old) modelling addition, necessitated by their philosophy of a curved space time and endless universal acceleration. This new, unproven, mathematical construct is always presented as ‘fact’.

However, given that the Einstotle’s General Theory of Relativity and especially his ‘curved space-time’ with gravitational ‘waves’, are the bedrock of the Big Bang, the mathigicians have a problem. The equations don’t work.

So, when in doubt imitate the Rona Totalitarians and inject a few boosters.

There was a booster shot called ‘Lambda’, which was assigned 73% of the energy density, the bulk of the missing 96%. Cold Dark Matter was a second stab, assigned to produce 23% of energy density (the current configuration is now 67-73% and 23-29%; ‘science’ is dynamic, you know). To make it sciency, the two booster shots were given an acronym, LCDM or ΛCDM, which stands for Lambda plus Cold Dark Matter.

However, adding the ‘safe and effective’ Lambda to General Relativity’s original tensor equation caused a rather large issue. The mathigicians had to redefine General Relativity, since it does not work with Lambda!

That is, unless Lambda equals zero, which negates their exercise, General Relativity cannot add up its tensors.²

Wizards and exploding fireworks

That is certainly a problem. But not the only one.

Now the mathigicians must calculate the rate of the creative event and original explosion. If this rate is too slow, the universe will go into what is called the ‘Big Crunch’: gravity will pull all the exploding parts back together before the universe can ‘evolve’ (by random chance, stuff happens) into the organized biophilic system evidenced today (biophilic meaning a human connection with nature and the cosmos, akin to the anthropic principle). If there is too little energy, the universe will not inflate, cannot create complex structures and cannot demonstrate a homogeneous radiation background.

If it’s too fast, the universe will be diffuse and will not be able to produce galactic structure and biological life, based as they are in secular theology on the gods of gravity, endless time and evolution. Too much energy and the universe will not properly and coherently expand and simply explode or degrade into a mess.

Somehow the ‘random chance’ explosion has to be calibrated to the nth degree of perfection.

It is an impossibility in reality. Not only must the rate be 100% perfect but an explanation for the perfection of the rate should be offered.

Why the ‘perfect rate’ and not some other rate? What is the chance of that happening?

Was the rate constant, variant or did it oscillate?

How much matter was ejected? Did this vary, was it all at once, were there many ‘sub explosions’?

Why is the ‘right’ level of material emitted, why not some other level? What is the chance of that happening?

Who or what created and measured the ‘right level’ of material?

How was the ‘anti-matter’ formed?

Why don’t we see anti-matter today? (Remember, the only way energy can be equated with matter is when matter and anti-matter combine, Lerner 1991; E=mc² is wrong and another fiction.)

And so on….their only recourse is magic.

Copernicus the Confused

One of the confusing aspects of the Polish monk’s canon was his finite universe. When Newton discovered gravity he realised this was a major issue. Newton understood that gravity within a closed-finite system would eventually pull the stars into one massive ball. In order to compensate for this problem, Newton opted for an infinite universe.

As time went by, ‘the science’ realised there were too many problems with an infinite universe, so Einstein tried to compensate for this by introducing an opposing force, which he called the ‘cosmological constant’. The Einstotle’s constant is the same deployed by Newton to balance universal forces and imitates the combination of dark matter and dark energy.

In 1915, when Einstein developed his General Theory of Relativity (GTR), it greatly disturbed him to discover that his geometrodynamic ‘law’ G = 8πT predicts a non-permanent universe; a dynamic universe; a universe that originated in a large irruption. This universe, unless it expands, will be destroyed by contraction to infinite density. Faced with this contradiction between his theory and the firm philosophical Newtonian belief of the day, Einstein weakened and modified his theory.³

In revising his own theory, Einstein faced the same issues as Newton. He reversed the effects of gravity to keep the universe from falling in on itself. The universe would remain static, not expanding or contracting. Einstein was determined to follow Mach’s principle, wherein space was defined by the matter within it. Universal stasis would depend on this finite mass of matter.

However, Willem de Sitter, one of Einstein’s more troublesome disciples, didn’t follow Mach’s rules and created a variation of Einstein’s cosmological constant. De Sitter ignored the matter of the universe principle and concentrated on quantum energy, an energy that would be enough to propel the expansion of the universe and provide a stabilising counter-force to gravity.

By the 1920s the choice was between Einstein’s static but matter-filled universe and de Sitter’s expanding but matter-deficient universe.

It should be noted that in 1905 in his ‘Special Theory of Relativity’, the Einstotle removed the ‘aether’ or a material medium and gravity. In 1915, in his GTR, he reinstated the aether, materiality and gravity (in a spacetime dimension with a gravitational ‘wave’). Suddenly post-1915, the universe was filled with matter. Previously it had lain naked and empty.

Even Einstein had to admit that matter needs a medium in which to transit. So too does light and electromagnetism. So the science ended up combining de Sitter and Einstein - an expanding, material-based (maybe not filled, but not empty) universe.

Endless infinity

To help develop the maths to explain the Einstein-de Sitter universe, Alexander Friedmann, a Russian (oh the horror) mathigician, parsed Einstein’s barely understandable equations, eliminated the cosmological constant and produced an expanding universe still under the constraints of GTR.⁴

To accomplish this Friedmann forced the equations to produce a universe whose matter was spread out evenly and was the same everywhere (i.e., isotropic and homogeneous, key principles of the Big Bang), otherwise known as the ‘cosmological principle’. Forcing equations and answers is a bedrock ‘axiom’ of ‘the science’.

Relativity Apostle and important evangeliser Arthur Eddington pointed out that even with the cosmological constant, an Einstein-universe was not really static or balanced. There were still issues. Since gravity and Einstein’s cosmological constant (Λ) had to be balanced so perfectly (like balancing a sword on its point), even minute fluctuations would produce a runaway expansion or an unstoppable contraction.

The best Friedmann could do was propose a universe with enough matter (what he called ‘the critical density’) that would allow the universe to expand for eternity but at an ever-decreasing rate, even though this solution itself was counterintuitive. Today of course ‘the science’ maintains that the rate of acceleration is increasing and is variable depending on the region of the universe. So much science.
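For readers who want a number attached to ‘the critical density’, here is a minimal sketch using the textbook expression ρ_c = 3H₀²/(8πG). The Hubble constant of 70 km/s/Mpc is an assumed round value, not a figure from the sources quoted in this post, and the function name is purely illustrative.

```python
import math

# Physical constants (SI units).
G = 6.674e-11             # gravitational constant, m^3 kg^-1 s^-2
METRES_PER_MPC = 3.0857e22
PROTON_MASS_KG = 1.6726e-27


def critical_density(h0_km_s_mpc: float) -> float:
    """Return the critical density in kg/m^3 for a Hubble constant in km/s/Mpc.

    Uses the textbook formula rho_c = 3 * H0^2 / (8 * pi * G).
    """
    h0_si = h0_km_s_mpc * 1000.0 / METRES_PER_MPC  # convert to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


# H0 = 70 km/s/Mpc is an ASSUMED illustrative value.
rho_c = critical_density(70.0)
protons_per_m3 = rho_c / PROTON_MASS_KG

print(f"critical density: {rho_c:.3e} kg/m^3")
print(f"equivalent to about {protons_per_m3:.1f} proton masses per cubic metre")
```

With these inputs the result is of order 10^-26 kg/m³, a handful of proton masses per cubic metre. The 4%, 23% and 73% figures discussed in this post are fractions of this quantity.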

“Einstein first proposed the cosmological constant…as a mathematical fix to the theory of general relativity. In its simplest form, general relativity predicted that the universe must either expand or contract. Einstein thought the universe was static, so he added this new term [(Λ) lambda] to stop the expansion. Friedmann, a Russian mathematician, realized that this was an unstable fix, like balancing a pencil on its point, and proposed an expanding universe model, now called the Big Bang theory” (this quote is from NASA, the space proxy of the US military machine).⁵

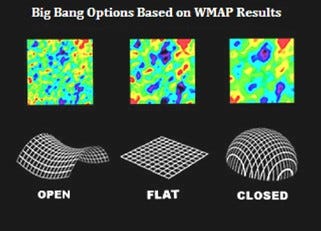

In the 1920s and 30s the excitable Hubble repaired some of the problem by interpreting the redshift of galaxies as a sign that the universe was indeed expanding (this redshift dogma is wrong). This still required that matter in transit remain homogeneous, as required by Friedmann’s maths, so that the universe can expand forever (as opposed to curving back in on itself). However, the cosmic microwave background (CMB) measured by WMAP in 2001-2003 showed a density fitting a ‘flat’ (not a curved) universe.

Sadly for Big Bangers ~73% of the positive energy to counterbalance gravity was still missing. What to do? Easy, bring in the mathigicians.

Wizards and magic

To arrive at zero energy to counterbalance the negative energy of gravity, and starting with ~4% of the needed matter, the mathigicians conjured up Dark Energy.⁶ According to the equations, about 73% of the universe must be composed of Dark Energy to make the Big Bang conform to Type Ia supernova requirements (release of energy from a white dwarf event, itself a mathematical exercise). This invention then allows the universe to be 13.7 billion years old (so that it is older than the stars) and gives enough energy to reach the needed ‘critical density’.
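How the Lambda term stretches the model’s age can be seen in a short sketch. It compares the textbook age of a flat, matter-only universe, t = 2/(3H₀), with the closed-form age of a flat universe containing matter plus a cosmological constant. The inputs (H₀ = 70 km/s/Mpc, Ω_Λ = 0.73) are assumptions chosen to match the percentages quoted in this post, radiation is ignored, and the function names are illustrative only.

```python
import math

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16  # one billion Julian years


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Return 1/H0 in billions of years."""
    return KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR


def age_matter_only_gyr(h0_km_s_mpc: float) -> float:
    """Age of a flat universe with matter only: t = 2 / (3 H0)."""
    return (2.0 / 3.0) * hubble_time_gyr(h0_km_s_mpc)


def age_flat_lambda_gyr(h0_km_s_mpc: float, omega_lambda: float) -> float:
    """Age of a flat universe with matter plus a cosmological constant.

    Closed form (radiation neglected):
    t = 2 / (3 H0 sqrt(OL)) * asinh(sqrt(OL / OM)), with OM = 1 - OL.
    """
    if not 0.0 < omega_lambda < 1.0:
        raise ValueError("omega_lambda must lie strictly between 0 and 1")
    omega_matter = 1.0 - omega_lambda
    factor = (2.0 / 3.0) / math.sqrt(omega_lambda) * math.asinh(
        math.sqrt(omega_lambda / omega_matter)
    )
    return factor * hubble_time_gyr(h0_km_s_mpc)


# ASSUMED illustrative inputs: H0 = 70 km/s/Mpc, Lambda fraction = 0.73.
age_without = age_matter_only_gyr(70.0)
age_with = age_flat_lambda_gyr(70.0, 0.73)

print(f"age without Lambda: {age_without:.1f} Gyr")
print(f"age with Lambda:    {age_with:.1f} Gyr")
```

Under these assumptions the matter-only age comes out a little over 9 billion years and the Lambda version a little under 14 billion, which is the gap the NASA quote at the top of this post refers to.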

None of this is proven. It is all mathematical legerdemain. Michio Kaku, one of the more effervescent Big Bang-string violinists admits it is all made up:

“No one at the present time has any understanding of where this ‘energy of nothing’ comes from….If we take the latest theory of subatomic particles and try to compute the value of this dark energy, we find a number that is off by 10^120.”⁷

Kaku admits this ‘modern theory’ using quantum mechanics is ‘off by 10^120’. This is 1 followed by 120 zeroes. If we compare this to 1 in a trillion (10^12), the maths are off by a factor of 10^108: one thousand quadrillion quadrillion quadrillion quadrillion quadrillion quadrillion quadrillion times larger than a trillion. Pretty accurate.
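The comparison with a trillion can be checked with exact integer arithmetic. This is only a sanity check on the size of the number, using the short-scale definitions of trillion (10^12) and quadrillion (10^15); the variable names are illustrative.

```python
# Short-scale number names.
TRILLION = 10 ** 12
QUADRILLION = 10 ** 15

discrepancy = 10 ** 120           # the figure quoted from Kaku

# How many times larger than a trillion is the discrepancy?
ratio = discrepancy // TRILLION   # exact integer division
exponent = len(str(ratio)) - 1    # power of ten of the ratio

# Express the ratio as (leftover power of ten) x quadrillion^n.
quadrillion_count, leftover_power = divmod(exponent, 15)

print(f"ratio = 10^{exponent}")
print(f"      = 10^{leftover_power} x quadrillion^{quadrillion_count}")
```

Python integers have arbitrary precision, so nothing is rounded here: the ratio is 10^108, which is a thousand (10^3) multiplied by seven factors of a quadrillion.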

Kaku is referring to the discovery by Russian (oh the horror) physicist Yakov Zeldovich, later established in quantum electrodynamics (QED) or quantum field theory (QFT), that ‘empty space’ has an energy 10^120 times greater than the Dark Energy needed to propel the proposed ‘accelerating expansion of the universe’. In other words, the universe is full of energy (back to plasma, the aether and electromagnetism). Whoopsie.

The 10^120 excess energy is the only source available but it cannot be cut up into slices. It is all or nothing. This is precisely why Big Bang advocates invented ‘Dark Energy’, the hopeful monster of energy that is more than the minuscule energy created by baryonic matter (protons, neutrons, atomic nuclei), but less than the 10^120 excess energy given by quantum theory.

But there is a problem.

· The Big Bang theologists are completely wrong about the amount of matter and energy in space. There is too much matter to balance the ‘Dark Energy’ equations.

· Einstein’s General Theory of Relativity requires that all forms of energy (even the 10^120) function as a source of gravity within curved spacetime.

· Einstein’s equations require that the ‘curvature’ of the universe depends on its energy content (Mach’s principle).

· Given the energy content is 10^120 times more than what Einstein proposed, the whole universe should presently be curled up into a space smaller than the dot on this i.

· Yet another problem was the time needed for the formation of stars and galaxies. Under Einstein-Friedmann calculations it appeared that the age of the universe was younger than the age of its oldest stars!

‘The Science’ is ‘overwhelming and settled’! To make things better, many in ‘The Science’ now propose that Dark Energy is emitted from non-existing Black Holes, ‘holes’ which cannot emit anything, light, energy and radiation included (except when they do, see the ‘Hawking paradox’)! That makes sense.

Some claim that observations of Type Ia supernovae, which are used as measuring devices for time and distance in Big Bang cosmology, indicate that the universal expansion is not slowing down, pace Big Bang modelling and endless time (Friedmann + Einstein), but speeding up. This suggests that this new acceleration (H²) cannot be accounted for by the present amount of energy and baryonic matter (Λ + Ω) in the Big Bang universe. In other words, what causes H²?
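For reference, the symbols H, Λ and the density usually appear together in the textbook Friedmann equations, written out below. This is the standard form, not something taken from the sources quoted in this post. In it, H is the expansion rate, a is the scale factor, ρ and p are the density and pressure of matter and radiation, and k is the curvature term; the acceleration itself is governed by the second equation.

```latex
\begin{align}
H^2 \equiv \left(\frac{\dot a}{a}\right)^2
  &= \frac{8\pi G}{3}\,\rho \;-\; \frac{k c^2}{a^2} \;+\; \frac{\Lambda c^2}{3} \\
\frac{\ddot a}{a}
  &= -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^2}\right) \;+\; \frac{\Lambda c^2}{3}
\end{align}
```

In the second line the matter term enters with a minus sign and the Λ term with a plus sign, so in this model only the Λ term can make the acceleration positive.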

Of course such assumptions of acceleration are suffused with tautologies and interpretations. Many other data points suggest no acceleration and a smaller universe (e.g. WMAP, JWST). It should be emphasised that a redshift is simply a light spectrum and frequency issue. It does not mean the object is recessionary or moving away (there are many posts on this topic).

Bottom Line

Consider the Dark Energy mathigician show:

1. Bangers want a zero-energy sum because they believe it will answer the question concerning the origins of the Big Bang, with the answer it came from nada.

2. Einstein’s equations do not support an expanding universe-model so the equations which are tautological and inaccurate to start with, need to be amended and adjusted to fit the storyline.

3. Additions to Einstein’s maths completely overturn GTR and make it incoherent. Adding Dark Energy to Einstein’s equations makes them invalid.

4. There is no proof of Dark Matter or Dark Energy; both are inserted to fulfill a priori conclusions pregnant with tautological assumptions and philosophies.

5. There is no possibility that the rates of expansion and quantity of matter at the explosion and initial expansion of the universe within the Big Bang model were so perfectly calibrated (less than 1 in a trillion-trillion-trillion if you apply probability theory).

Conclusion: The entire edifice of GTR and the Big Bang is built on mathimagicianism. Why is this taught as ‘fact’?

Little of what is in this post is debated or even known. Open ‘science’ and all that.

All hail.

===

1 “New Secrets of the Universe,” Brian Greene, Newsweek, May 28, 2012, p. 23

Einstein’s Gμν ‒ Λgμν = 8πGΤμν is now the Big Bang’s Gμν = 8πGΤμν + Λgμν.

The term Gμν is the curvature tensor, which is the geometry of Einstein’s ‘spacetime.’

The term Tμν is the stress- or energy-momentum tensor, which represents the precise distribution of matter and energy in the universe. In other words, the geometry of space is curved based on the amount of matter and energy it contains.

The term G is the universal gravitational constant. The term gμν is the spacetime metric tensor that defines distances.

The 8π is the factor necessary to make Einstein’s gravity reduce to Newton’s gravity in the weak or minimal field limit.

As it stands, in the equation Gμν = 8πGΤμν + Λgμν, the Λgμν is Dark Energy and 8πGΤμν is baryonic matter and Dark Matter.

Often the term Λgμν is replaced by ρvac gμν, which more accurately represents the energy of the quantum vacuum, whereas Λgμν is more accurately General Relativity’s concept of spacetime.
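Typeset, the relation described in this note reads as follows. It is written in units where c = 1, and the sign in front of Λ follows the convention used in this post; textbooks differ on that sign depending on their conventions. The second expression is the usual definition of the Einstein tensor in terms of the Ricci tensor and Ricci scalar.

```latex
G_{\mu\nu} = 8\pi G\, T_{\mu\nu} + \Lambda\, g_{\mu\nu},
\qquad
G_{\mu\nu} \equiv R_{\mu\nu} - \tfrac{1}{2}\, g_{\mu\nu} R
```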

2 Misner, Thorne and Wheeler, Gravitation, pp. 409-410: The only conceivable modification that does not alter vastly the structure of the theory is to change the lefthand side of the geometrodynamic law G = 8πT. The lefthand side is forced to be the Einstein tensor, Gαβ = Rαβ ‒ ½gαβR

3 Misner, Thorne and Wheeler, Gravitation, p. 410

4 For a good analysis of Friedmann’s five equations, see http://nicadd.niu.edu/~bterzic/PHYS652/Lecture_05.pdf

5 “Dark Energy: A Cosmological Constant?” http://map.gsfc.nasa.gov/universe/uni_matter.html

6 “Dark Energy: A Cosmological Constant?” http://map.gsfc.nasa.gov/universe/uni_matter.html

7 M. Kaku, Parallel Worlds, p. 12

I am terrible at math but I think I understand the points made in the article that if it doesn’t work we will do a mathematical magic trick so it will.

Modeling.

When I saw the word and after reading the article, it made me think of building a model car and claiming it was a real car might be a simple analogy, maybe ???

> You sit at your desk and torture maths equations until they scream that the 50 km is really 100 km. You convince yourself that you really cycled 100 km.

Even more accurately, you sit at your desk and claim that the 50km expanded to 100km while you were cycling it because of some magic operation of the road beneath your feet, but it's still the equivalent of 100km of cycling that you did. Then come up with math to model that, in support of your assertion.

This is a wonderful piece, Doc. This exact sort of "thinking" gets applied in all fields that use quanty methods. Desired-conclusion-justifying coefficients are the Leatherman multitool for so much of what passes for empirical truth. And it's so much easier, and more professionally rewarding, to gin up malarkey than to point it out/deconstruct it.